Installing Amber on Various Platforms

Learning objectives

Learn how to install Amber on various supercomputing platforms

Learn how to make that Amber installation compatible with FE-Toolkit and AmberFlow

Tutorial

Amber is one of the programs our group uses and develops most heavily, so you will likely install and run it on a wide range of supercomputing environments, each of which requires different installation steps.

Setting Up Your Environment (All Platforms)

Before installing Amber, you will need to set up your environment, specifically Python.

Download Miniforge (a minimal conda installer) from <https://github.com/conda-forge/miniforge>.

Install it with the following (accept all defaults):

bash Miniforge3-MacOSX-arm64.sh # for Mac Apple Silicon (M-series)

bash Miniforge3-MacOSX-x86_64.sh # for Mac Intel

bash Miniforge3-Linux-aarch64.sh # for Linux ARM

bash Miniforge3-Linux-x86_64.sh # for Linux Intel/AMD
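If you are unsure which of the four installers applies to a machine, the choice can be derived from `uname`. The following is a minimal sketch, not part of Miniforge: the function name `miniforge_installer_name` is hypothetical, and it only maps to the installer filenames listed above.

```shell
# Sketch: print the Miniforge installer filename for an OS/architecture pair.
# With no arguments, the values are taken from `uname -s` and `uname -m`.
miniforge_installer_name() {
    local os
    local arch
    local os_tag
    os="${1:-$(uname -s)}"
    arch="${2:-$(uname -m)}"
    case "$os" in
        Darwin) os_tag="MacOSX" ;;   # macOS reports "Darwin"
        Linux)  os_tag="Linux" ;;
        *) echo "unsupported OS: $os" >&2; return 1 ;;
    esac
    case "$arch" in
        arm64|aarch64|x86_64) ;;     # the architectures listed above
        *) echo "unsupported architecture: $arch" >&2; return 1 ;;
    esac
    echo "Miniforge3-${os_tag}-${arch}.sh"
}

# Example: print the installer name for the current machine.
miniforge_installer_name
```

After downloading the file with that name, run it with `bash` as shown above.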

Create a mamba environment for Amber:

mamba create -n amber "python>3.11" "numpy<2" scipy matplotlib cython rdkit pyyaml -y

mamba activate amber

Clone the Amber git repository (you must have access to it), create a separate build directory next to it, and switch to the lbsr_dev branch:

git clone git@gitlab.ambermd.org:amber/amber.git

mkdir debug_lbsr_dev

cd amber

git switch lbsr_dev

cd ../debug_lbsr_dev

Note

The above uses the lbsr_dev branch; however, you can use any branch you want. Usually, the lbsr_dev branch is the most up-to-date and stable for our purposes.

Installing Amber on Frontera

Get an interactive node

idev -p flex -t 4:00:00 -N 1

Load the required modules

module load gcc/9.1.0 cuda/12.2 cmake/3.24.2 impi/19.0.9

Note

While not strictly required for Amber, we recommend also loading the following module for FE-Toolkit compatibility.

module load mkl/19.1.1

Run CMake to configure the build.

cmake ../amber -DCMAKE_BUILD_TYPE=RELEASE -DCMAKE_INSTALL_PREFIX=../install_lbsr_dev -DCOMPILER=GNU -DMPI=TRUE -DCUDA=TRUE -DINSTALL_TESTS=TRUE -DDOWNLOAD_MINICONDA=FALSE -DBUILD_PYTHON=ON -DDISABLE_PBSA_CUDA=ON

Build and install (with parallel make)

make -j 56 install
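The `-j 56` value presumably matches the core count of the node used for the build. On a node with a different core count, the job count can be queried instead of hard-coded. A minimal sketch, where the helper name `build_jobs` is hypothetical:

```shell
# Sketch: print the number of online CPU cores, falling back to 1.
# `nproc` is available on most Linux systems; `getconf` is a fallback.
build_jobs() {
    local n
    n="$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)"
    echo "$n"
}

# Usage (inside the build directory):
#   make -j "$(build_jobs)" install
echo "parallel build jobs: $(build_jobs)"
```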

Note

Installing FE-Toolkit on Frontera

If you want to install FE-Toolkit, use the following commands.

cd ../

git clone git@gitlab.com:RutgersLBSR/fe-toolkit.git

cd fe-toolkit

mkdir debug

cd debug

cmake .. -DCMAKE_INSTALL_PREFIX=../local -DBUILD_PYTHON=TRUE -DPython3_EXECUTABLE=`which python3` -DCMAKE_CXX_COMPILER=`which g++` -DCMAKE_Fortran_COMPILER=`which gfortran` -DCMAKE_CXX_FLAGS="-O3 -DNDEBUG -Wall -Wextra -Wunused -march=native -mtune=native"

make -j 56 install

Now add the following function to your .bashrc file (or an independent script that you can source when needed).

function loadamber() {

module load gcc/9.1.0 cuda/12.2 cmake/3.24.2 impi/19.0.9 mkl/19.1.1

mamba activate amber

export BACKUP_PATH=$PATH

export BACKUP_PYTHONPATH=$PYTHONPATH

source <path to your amber install>/install_lbsr_dev/amber.sh

export PATH="<path to your fe-toolkit dir>/fe-toolkit/debug/bin:$BACKUP_PATH:$PATH"

export PYTHONPATH="<path to your fe-toolkit dir>/fe-toolkit/local/lib/python3.11/site-packages:$BACKUP_PYTHONPATH:$PYTHONPATH"

}
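Note that the function above hard-codes `python3.11` in the PYTHONPATH entry, while the environment was created with "python>3.11", so the installed interpreter may be a newer minor version. One way to avoid a mismatch is to derive the directory name from the interpreter itself. This is a sketch under that assumption; the helper name `python_lib_dir` is hypothetical.

```shell
# Sketch: turn the output of `python3 --version` (e.g. "Python 3.12.4")
# into the matching lib directory name (e.g. "python3.12").
python_lib_dir() {
    local version
    local major
    local rest
    local minor
    version="${1#Python }"   # strip the leading "Python "
    major="${version%%.*}"   # text before the first dot
    rest="${version#*.}"     # text after the first dot
    minor="${rest%%.*}"      # text before the next dot
    echo "python${major}.${minor}"
}

# Usage inside loadamber (the path placeholder is the same as above):
#   pydir="$(python_lib_dir "$(python3 --version)")"
#   export PYTHONPATH="<path to your fe-toolkit dir>/fe-toolkit/local/lib/$pydir/site-packages:$PYTHONPATH"
python_lib_dir "Python 3.12.4"
```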

To install AmberFlow (only if you have access to it):

cd ../

git clone git@gitlab.com:RutgersLBSR/amberflow.git

cd amberflow

Open the pyproject.toml file and remove the edgembar dependency, since we built it from source.
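The pyproject.toml edit can also be scripted. The sketch below assumes each dependency sits on its own line in pyproject.toml, which may not hold for the actual file, so inspect the result before installing. The function name `remove_dependency_lines` is hypothetical.

```shell
# Sketch: drop every line mentioning a dependency from a file,
# keeping a backup copy next to it as <file>.bak.
# Assumption: one dependency per line; a line is removed whole.
remove_dependency_lines() {
    local file
    local name
    file="$1"
    name="$2"
    cp "$file" "$file.bak"
    # grep -v exits non-zero when nothing is printed; that is not an error here.
    grep -v -i -- "$name" "$file.bak" > "$file" || true
}

# Usage (from the amberflow directory):
#   remove_dependency_lines pyproject.toml edgembar
#   diff pyproject.toml.bak pyproject.toml   # review what was removed
```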

Then run:

pip install -e .

You should have a working Amber installation now!
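A quick way to confirm this is to check that the expected executables are on your PATH after calling `loadamber`. The sketch below is a generic helper (the name `missing_tools` is hypothetical), and the program names in the usage comment are assumptions about what your build produced.

```shell
# Sketch: report which of the given programs are not found on PATH.
# Returns 0 when all are found, 1 otherwise.
missing_tools() {
    local tool
    local missing
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Usage after `loadamber` (program names are assumptions; adjust to your build):
#   missing_tools pmemd.cuda sander edgembar && echo "all tools found"
```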

Installing Amber on Vista

Get an interactive node

idev -N 1 -p gg -t 4:00:00

Load the required modules

module load nvidia/24.7 openmpi/5.0.3 cuda/12.4 nvidia_math/12.4 nccl/12.4 gcc/13.2.0 cmake/3.31.5

Note

While not strictly required for Amber, we recommend also loading the following module for FE-Toolkit compatibility.

module load nvpl

Run CMake to configure the build.

cmake ../amber -DCMAKE_BUILD_TYPE=RELEASE -DCMAKE_INSTALL_PREFIX=../install_lbsr_dev -DCOMPILER=GNU -DMPI=TRUE -DCUDA=TRUE -DNVIDIA_MATH_LIBS=${TACC_NVIDIA_MATH_LIB} -DINSTALL_TESTS=TRUE -DDOWNLOAD_MINICONDA=FALSE -DBUILD_PYTHON=ON -DDISABLE_PBSA_CUDA=ON

Build and install (with parallel make)

make -j 56 install

Note

Installing FE-Toolkit on Vista

If you want to install FE-Toolkit, use the following commands.

cd ../

git clone git@gitlab.com:RutgersLBSR/fe-toolkit.git

cd fe-toolkit

mkdir debug

cd debug

cmake .. -DCMAKE_INSTALL_PREFIX=../local -DBUILD_PYTHON=TRUE -DPython3_EXECUTABLE=`which python3` -DCMAKE_CXX_COMPILER=`which g++` -DCMAKE_Fortran_COMPILER=`which gfortran` -DCMAKE_CXX_FLAGS="-O3 -DNDEBUG -Wall -Wextra -Wunused -march=native -mtune=native"

make -j 56 install

Now add the following function to your .bashrc file (or an independent script that you can source when needed).

function loadamber() {

module load nvidia/24.7 openmpi/5.0.3 cuda/12.4 nvidia_math/12.4 nccl/12.4 gcc/13.2.0 cmake/3.31.5 nvpl

mamba activate amber

export BACKUP_PATH=$PATH

export BACKUP_PYTHONPATH=$PYTHONPATH

source <path to your amber dir>/install_lbsr_dev/amber.sh

export PATH="<path to your fe-toolkit dir>/fe-toolkit/debug/bin:$BACKUP_PATH:$PATH"

export PYTHONPATH="<path to your fe-toolkit dir>/fe-toolkit/local/lib/python3.11/site-packages:$BACKUP_PYTHONPATH:$PYTHONPATH"

}
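Because the function above appends both `$BACKUP_PATH` and `$PATH`, the search path contains duplicate entries and grows each time `loadamber` is called in the same shell. If that becomes a nuisance, an idempotent prepend is one option. This is a sketch of a common shell idiom, not part of Amber or FE-Toolkit; the name `path_prepend` is hypothetical.

```shell
# Sketch: prepend a directory to PATH only if it is not already present.
path_prepend() {
    local dir
    dir="$1"
    case ":$PATH:" in
        *":$dir:"*) ;;              # already on PATH: do nothing
        *) PATH="$dir:$PATH" ;;
    esac
}

# Usage inside loadamber (placeholder path as above):
#   path_prepend "<path to your fe-toolkit dir>/fe-toolkit/debug/bin"
#   export PATH
```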

To install AmberFlow (only if you have access to it):

cd ../

git clone git@gitlab.com:RutgersLBSR/amberflow.git

cd amberflow

Open the pyproject.toml file and remove the edgembar dependency, since we built it from source.

Then run:

pip install -e .

Installing Amber on Amarel

Compiling Amber for development

We’ll make a few changes to some of the CMake files to ease the development and build process.

Goals

Allow newer CUDA versions (you likely have a recent CUDA toolkit).

Enable debugging statements.

Turn off RISM’s CUDA implementation.

CMake scripts to edit

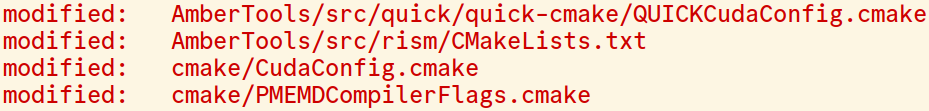

These are the files we’ll edit:

AmberTools/src/quick/quick-cmake/QUICKCudaConfig.cmake

At line 113, change:

elseif((${CUDA_VERSION} VERSION_GREATER_EQUAL 12.8) AND (${CUDA_VERSION} VERSION_LESS_EQUAL 12.8))

to:

elseif((${CUDA_VERSION} VERSION_GREATER_EQUAL 12.8) AND (${CUDA_VERSION} VERSION_LESS_EQUAL 13.8))
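The original condition has identical lower and upper bounds, so it matches only CUDA 12.8; widening the upper bound to 13.8 lets newer toolkits through. To check ahead of time whether your toolkit falls inside the edited range, the comparison can be mimicked in the shell. This is a sketch that relies on `sort -V` (version sort), not on CMake itself, and the name `version_in_range` is hypothetical.

```shell
# Sketch: succeed when lo <= v <= hi under version ordering.
version_in_range() {
    local v
    local lo
    local hi
    v="$1"
    lo="$2"
    hi="$3"
    # v >= lo when lo sorts first; v <= hi when hi sorts last.
    [ "$(printf '%s\n%s\n' "$lo" "$v" | sort -V | head -n 1)" = "$lo" ] &&
    [ "$(printf '%s\n%s\n' "$v" "$hi" | sort -V | tail -n 1)" = "$hi" ]
}

# Usage: extract the release number from nvcc and test it against the new bounds.
#   cuda="$(nvcc --version | sed -n 's/.*release \([0-9.]*\),.*/\1/p')"
#   version_in_range "$cuda" 12.8 13.8 && echo "CUDA $cuda is accepted"
```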

AmberTools/src/rism/CMakeLists.txt

At line 150, change:

if(CUDA) ## float version

to:

if(FALSE) ## float version

cmake/CudaConfig.cmake

At line 80, change:

elseif((${CUDA_VERSION} VERSION_GREATER_EQUAL 12.7) AND (${CUDA_VERSION} VERSION_LESS 12.9))

to:

elseif((${CUDA_VERSION} VERSION_GREATER_EQUAL 12.7) AND (${CUDA_VERSION} VERSION_LESS 13.9))

At line 82, keep only the flag for your GPU's architecture. The example below keeps SM89FLAGS; substitute the one corresponding to your GPU. Change:

list(APPEND CUDA_NVCC_FLAGS ${SM70FLAGS} ${SM75FLAGS} ${SM80FLAGS} ${SM86FLAGS} ${SM89FLAGS} ${SM90FLAGS} ${SM100FLAGS} ${SM120FLAGS} -Wno-deprecated-gpu-targets -Wno-deprecated-declarations -std=c++14)

to:

list(APPEND CUDA_NVCC_FLAGS ${SM89FLAGS} -Wno-deprecated-gpu-targets -Wno-deprecated-declarations -std=c++14)
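To find which flag corresponds to your GPU, you can start from its compute capability: the variable names in the list follow the pattern SM, then the capability digits, then FLAGS. A minimal sketch, where the helper name `sm_flag_name` is hypothetical and the `compute_cap` query assumes a reasonably recent NVIDIA driver:

```shell
# Sketch: map a compute capability such as "8.9" to the CMake
# variable name used in cmake/CudaConfig.cmake, e.g. "SM89FLAGS".
sm_flag_name() {
    local cap
    cap="$1"
    echo "SM$(printf '%s' "$cap" | tr -d '.')FLAGS"
}

# Usage on a GPU node:
#   cap="$(nvidia-smi --query-gpu=compute_cap --format=csv,noheader | head -n 1)"
#   sm_flag_name "$cap"
sm_flag_name 8.9
```

Check that the printed name actually appears in the original list on line 82 before using it; if it does not, this Amber version has no flags defined for that architecture.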

At line 87, after the previous long if-else, add:

list(APPEND CUDA_NVCC_FLAGS -g -G -keep --source-in-ptx)

cmake/PMEMDCompilerFlags.cmake

At line 299, at the bottom just above the MKL config, add:

set(PMEMD_NVCC_FLAGS -O0 -g -G -keep --source-in-ptx)

HPC-SDK interaction

If you have the NVIDIA HPC SDK installed and CMake finds it before the CUDA toolkit, the CUDA libraries might be detected incorrectly. Point CMake to the CUDA installation using the CUDA_TOOLKIT_ROOT_DIR option. For example:

cmake ../amber -DCMAKE_BUILD_TYPE=DEBUG ... -DCUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda
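To see which installation your shell would pick up, look at where the first `nvcc` on your PATH lives. The sketch below assumes the conventional layout in which `nvcc` sits in a `bin` directory directly under the toolkit root; the helper name `toolkit_root_from_nvcc` is hypothetical.

```shell
# Sketch: derive a toolkit root from an nvcc path by stripping "/bin/nvcc".
# Assumption: nvcc is located at <root>/bin/nvcc.
toolkit_root_from_nvcc() {
    local nvcc_path
    nvcc_path="$1"
    dirname "$(dirname "$nvcc_path")"
}

# Usage:
#   toolkit_root_from_nvcc "$(command -v nvcc)"
toolkit_root_from_nvcc /usr/local/cuda/bin/nvcc
```

If the printed directory lies inside an HPC SDK tree rather than a CUDA toolkit, pass the toolkit location explicitly through CUDA_TOOLKIT_ROOT_DIR as shown above.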